Artificial intelligence should complement human healthcare providers, not replace them.

Image source: Ümit Bulut/Unsplash

Hailey Fowler

Oliver Wyman Health & Life Sciences Business Partner

John Lester

Oliver Wyman Partner

Approximately 85% of people with mental health issues do not receive treatment, often due to a shortage of healthcare providers.

Artificial intelligence can be used to enhance mental health care and help address the shortage of providers.

To maximize the benefits of generative AI, it should complement human caregivers rather than replace them.

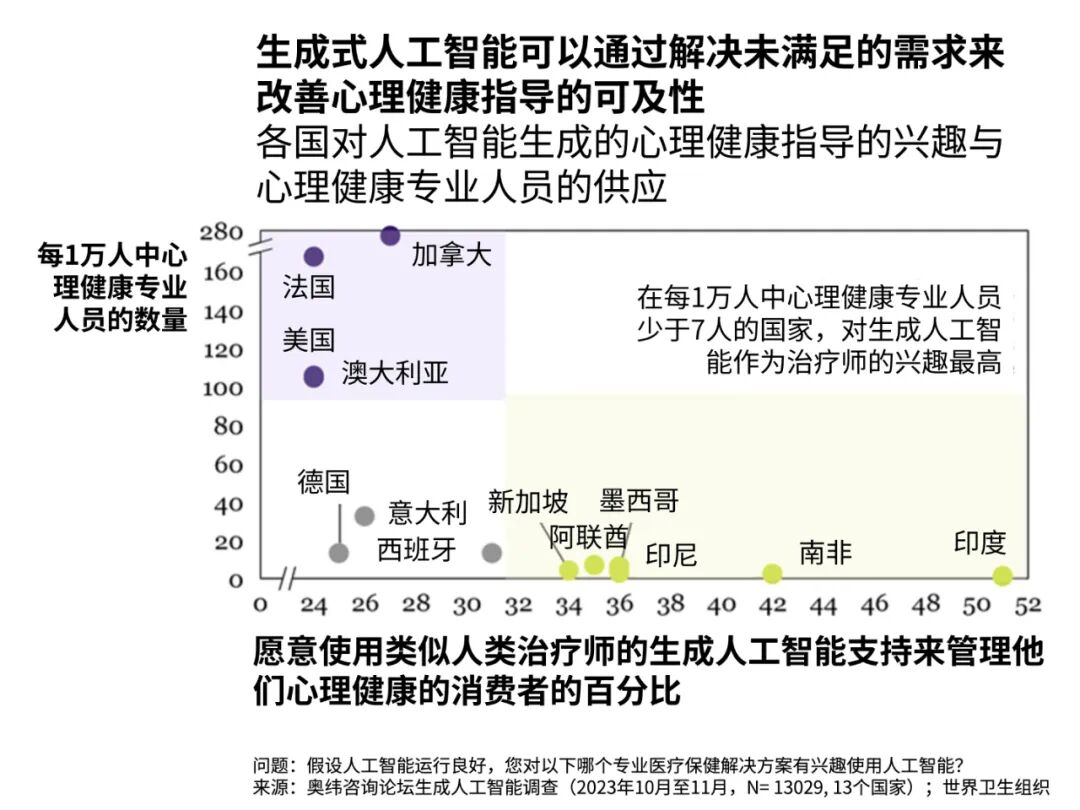

Mental health is a major global public health issue. According to the World Health Organization, approximately 1 billion people worldwide were living with a mental health or substance use disorder before the COVID-19 pandemic. The pandemic has worsened the problem, driving up rates of depression and anxiety by 25% to 27%. Meanwhile, researchers from Harvard Medical School and the University of Queensland have found that roughly half of the global population is expected to develop a mental health disorder at some point in their lives.

Compounding this problem is a shortage of qualified professionals to treat patients. According to the World Health Organization's Mental Health Atlas, there are only 13 mental health workers per 100,000 people worldwide. The gap between advanced and less-developed economies is particularly striking: in high-income countries, the number of mental health workers can be up to 40 times higher than in low-income countries. A research report published in the *International Journal of Mental Health Systems* notes that workforce shortages severely limit people's access to mental health services, particularly in low- and middle-income countries, leaving about 85% of people with mental disorders untreated.

This supply-demand gap underscores the urgent need for alternative solutions. Just as telemedicine has expanded treatment options for many illnesses, artificial intelligence has the potential to make mental health care accessible to more patients. According to Oliver Wyman's report "How Generative AI Is Transforming Business and Society," which surveyed 16,000 people across 16 countries, many patients are eager to explore AI-driven therapy as a viable treatment option.

How applicable AI tools are will depend on the severity of the patient's condition, in terms of both the underlying diagnosis and the symptoms they are experiencing at a given moment. Still, in many cases, AI can significantly improve access to emergency care. It can also analyze data and assist clinicians in treating patients in real time, delivering more personalized insights and guidance.

The Rise of AI-Based Mental Health Therapy

Artificial intelligence has a wide range of applications in mental health treatment. AI can integrate insights from diverse sources, such as medical textbooks, research papers, electronic health record systems, and clinical literature, to help mental health professionals recommend therapeutic approaches and predict how patients will respond.

Meanwhile, self-diagnosis apps, chatbots, and other conversational therapy tools powered by generative AI have lowered barriers to access for patients with milder conditions.

Many consumers are open to trying AI tools to manage their mental health. In fact, 32% of Oliver Wyman survey respondents said they would be interested in using AI instead of a human therapist. At the high end, 51% of respondents in India said they were willing to try AI-generated therapy, compared with just 24% in the U.S. and France.

Interest in AI therapists is highest in countries with few mental health professionals per capita, highlighting AI's potential to expand the reach of mental health services, particularly in emerging markets.

Generative AI can enhance access to mental health guidance by addressing unmet needs.

Image source: Oliver Wyman Consulting

Younger generations are more eager to use technology for this purpose: 36% of Gen Z and Millennial respondents say they are interested in using AI for mental health, compared with just 28% of other age groups. This generational gap mirrors broader trends in mental health prevalence and attitudes. According to another Oliver Wyman report, Gen Z is twice as likely as other generations to experience mental health issues, and twice as likely to seek treatment. Their proactive approach and strong interest in AI could well drive widespread adoption of AI-powered mental health tools in the future.

Although generative AI lacks genuine emotions, consumers still perceive it as a trustworthy emotional companion, perhaps because it is always available and "replicates" empathy by identifying patterns in its underlying data. The research found that more than five times as many respondents credited generative AI, rather than humans, with making them feel they have a trustworthy confidant with whom they can share personal thoughts and seek life advice. Among the four-fifths of respondents who said they preferred AI in at least one situation, about 15% said they believe AI shows greater emotional intelligence than humans do.

Proceed with caution when using AI-assisted care.

Before deploying AI tools, however, it is essential to assess the risks associated with the technology to ensure that care remains safe and effective. AI-assisted mental health care should be viewed as part of a multifaceted approach, not as a direct replacement for human-to-human interaction.

The severity of a patient's condition can fluctuate even within a single episode, so understanding the full spectrum of behavioral health conditions must come first. With that knowledge, healthcare systems can deliver more personalized care. Digital health companies must also build in features that direct patients to human professionals when the app detects that their condition is worsening. The goal is to reduce the risk of patients following incorrect advice that could lead to self-harm.

Confidentiality and security are another critical issue. Data can be, and often is, sold for marketing purposes, and regulators worldwide are issuing warnings to AI companies and technology providers. The U.S. Federal Trade Commission has warned that it will take legal action against companies that loosen their data policies to gain deeper access to customer information. Meanwhile, EU member states unanimously approved the AI Act earlier this year, establishing stringent new standards for regulating artificial intelligence and imposing hefty penalties on those who fail to comply.

Beyond data mining and privacy concerns, security remains the top priority for all healthcare institutions. Healthcare is a prime target for cybercriminals, and consumers must be fully aware of the risks before downloading apps onto their devices. Recognizing and addressing these vulnerabilities will help ensure that generative AI is used safely, protecting patients and their right to confidential care.

To maximize the benefits of the technology, generative AI should complement human caregivers rather than replace them. Ultimately, collaboration between AI and human caregivers will let mental health professionals focus more deeply on compassionate, personalized care, improving patient outcomes, accessibility, and efficiency in the process.

The above content represents only the authors' personal views. This article is translated from the World Economic Forum's Agenda blog; the Chinese version is for reference purposes only.

Editor: Wang Can

The World Economic Forum is an independent and neutral platform dedicated to bringing together diverse perspectives to discuss critical global, regional, and industry-specific issues.