Some countries are stepping up efforts to protect social media users from harmful content.

Image source: Reuters / Elizabeth Frantz

John Letzing

Digital Editor, World Economic Forum

The arrest of Telegram's founder and CEO has prompted heightened scrutiny of how content is currently moderated on social media platforms.

One expert says the coming years will be "challenging," as the need for stronger safeguards must increasingly be balanced against demands for free speech.

Some countries are pushing for greater transparency and more research to strengthen protections.

Last October, Hamas launched a surprise attack on Israel, and shortly afterward the terrorist group's Telegram channels were flooded with images of the atrocities. Telegram's response? It restricted those channels, but reportedly to very limited effect.

Telegram has long tried to cultivate a reputation as a bastion of free speech, at times taking its defense of that freedom to extremes. Nearly a year later, that very reputation has been shaken by the arrest of its founder and CEO in Paris.

Pavel Durov has been required to remain in France while he is investigated over alleged involvement in criminal activity on his hugely popular platform. In a post on his channel, he wrote: "I've been questioned by the police for four days. If innovators knew they might personally be held accountable for the potential misuse of the tools they create, they'd never develop anything new in the first place."

The sudden legal scrutiny raises a key question: how do these platforms typically handle content moderation? And how are they expected to handle it?

As with so many other aspects of our increasingly automated lives, humans still play a central role. The image of office workers in cubicles, staring at screens and making split-second decisions about content, may have been partly supplanted by AI and machine learning. Yet these overworked, often undervalued people remain an indispensable last line of defense.

However, Sasha Havlicek, CEO of the Institute for Strategic Dialogue, believes people are losing patience with the way things are currently done. "Content moderation across all platforms continues to suffer from persistent, systemic failures," she said.

Content moderation is no easy task.

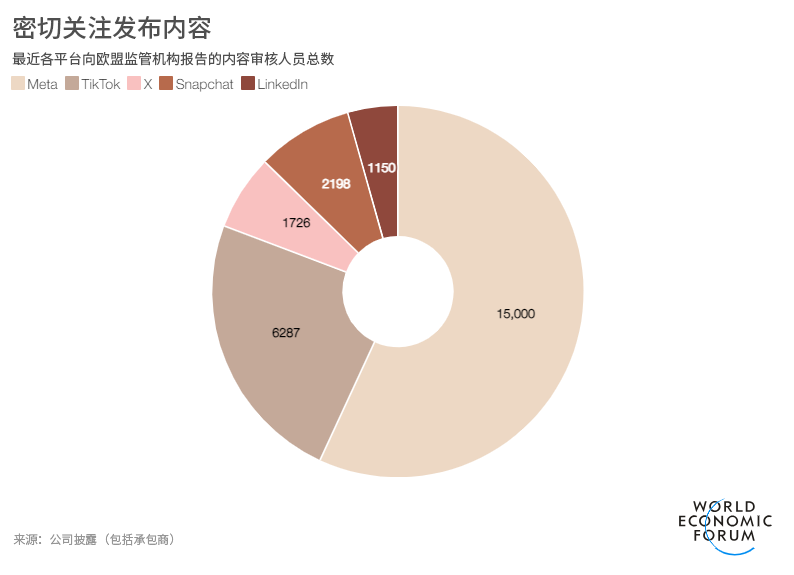

Image source: World Economic Forum

There's a reason content moderation is often called the hardest problem on the internet. In many ways it has been treated as an afterthought, yet in practice it serves as the final safeguard for businesses built to attract the largest possible audiences, often by eliciting the strongest possible reactions from them. Meanwhile, on questions of free expression that philosophers have debated for centuries, moderators may be expected to deliver judgments almost instantly.

Of course, some decisions are easier than others. Havlicek distinguished activity that is clearly illegal and harmful, the clear-cut end of the problem, from the "gray area" of content that is "bad but legal," which is far harder to regulate. Even so, she noted that in many parts of the world, particularly in non-English-speaking countries, even clearly illegal content often slips through.

"Currently, the situation is quite interesting: aside from the U.S., the Five Eyes alliance and the EU are essentially moving toward regulation," she said, referring to the five English-speaking countries that share intelligence (Australia, Canada, New Zealand, the UK, and the U.S.).

But Havlicek warned that "overly stoking the flames" among free-speech absolutists, who already view efforts to regulate social media as oppressive censorship, "is dangerous," which will make the coming years particularly challenging.

The shortage of skilled content moderators might seem like an easy problem to fix. The EU's Digital Services Act, a relatively new law that Havlicek says could "change the game," requires the largest social media platforms to disclose a range of figures, including how many of their moderators are proficient in local languages.
For instance, X's latest report shows 1,535 moderators proficient in English, 29 in Spanish, 2 in Italian, and 1 in Polish. "Even with 20 content moderators per country, it would still feel a bit thin," Havlicek said. "And these are currently the best-served markets for content moderation, so you can imagine how things stand elsewhere."

The ideal candidate for automation?

The psychological toll on content moderators is by now well known, so much so that it has inspired a Broadway play. According to Playbill, the protagonist of "JOB" is a troubled woman who "must remove some of the most disturbing and bafflingly vile content from the internet."

"The people currently doing this job aren't receiving the compensation or protection they deserve," Havlicek said. Moderators have already filed lawsuits in multiple countries, and harrowing accounts of the work have appeared in news reports for at least a decade. Havlicek's organization works with governments and platforms worldwide to chart a safer, more stable path forward, and it has its own team that carries out related research. The organization sets strict time limits on that research and mandates access to psychological counseling.

All of this would seem to make content moderation a natural fit for automation. However, more complex tasks, such as detecting irony or analyzing specific political situations in depth, still require skilled humans.

Looking at the bigger picture, Havlicek says, this isn't just about removing or flagging problematic content: it's fundamentally a problem with how content is curated and amplified.
In other words, it's about how algorithms designed to attract users and boost ad revenue are distorting the information environment, pushing people toward increasingly "extreme" spaces.

With geopolitical tensions escalating and the election still ahead, this is particularly troubling. What if spreading misinformation simply requires amplifying voices that already exist? In a recent indictment, the U.S. Department of Justice accused a company of doing exactly that.

Havlicek said platforms by now have considerable experience identifying "information operations by state actors," but still struggle to keep up with constantly evolving tactics.

Well-designed measures like the EU's Digital Services Act could help a great deal, Havlicek said. A key provision of the act gives independent researchers access to platform data, with the aim of increasing transparency and enabling a more thorough assessment of risks. "We need to prove that legislation like the Digital Services Act can work," she said.

Only online platforms designated as "very large" are subject to these requirements. Service providers should be accountable for user safety, Havlicek said, "especially when their user base is big enough to impact society as a whole." Telegram does not yet qualify as very large, but it is getting close.

Many people believe that when moderation is done well, no one even notices, and Telegram would seem to count itself among them. Durov wrote on his channel: "Some media outlets claim Telegram is some kind of anarchist paradise, but that's absolutely not true. In fact, we remove millions of harmful posts and channels every day."

Telegram recently posted a job advertisement for content moderators, requiring candidates to demonstrate strong analytical skills and "quick response capabilities."

About five months before his arrest, Durov stated that content moderation across "all major social media platforms" was "prone to criticism."
He pledged that Telegram would address the issue in a way that is "efficient, innovative, and respectful of privacy and freedom of speech."

After his arrest, he wrote: "Finding the right balance between privacy and security is no easy task." But, he added, his company would stay true to its principles.

The next day, he announced that Telegram had reached 10 million paying users, complete with a celebratory emoji.

The above content represents the author's personal views only. This article is translated from the World Economic Forum's Agenda blog; the Chinese version is for reference purposes only.

Translated by: Sun Qian | Edited by: Wang Can

The World Economic Forum is an independent and neutral platform dedicated to bringing together diverse perspectives to discuss critical global, regional, and industry-specific issues.
