Lessons learned from addressing racial bias in healthcare algorithms can and should guide other fields in achieving data equity.

Image source: Unsplash/Hush Naidoo Jade

JoAnn Stonier

Mastercard Data and AI Researcher

Lauren Woodman

DataKind CEO

Karla Yee Amezaga

Head of Data Policy at the World Economic Forum

Historical algorithmic biases in healthcare highlight the urgent need for data equity.

A study found that correcting racial bias in a healthcare algorithm could increase the proportion of Black patients receiving additional care from 17.7% to 46.5%.

Now, the Global Future Council on Data Equity at the World Economic Forum has unveiled a new framework aimed at guiding industries in building more equitable data systems.

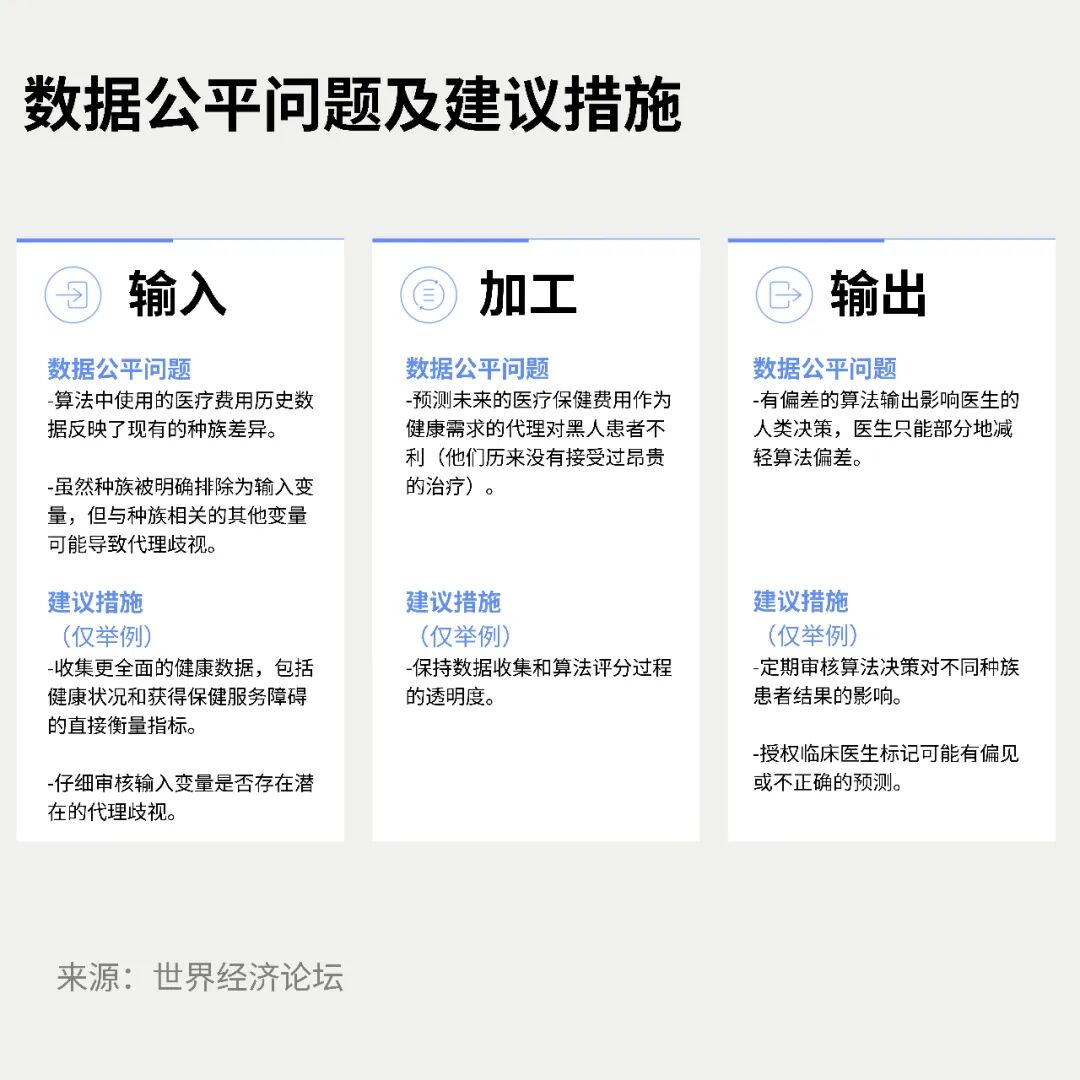

In an era when data-driven decisions increasingly shape the world, ensuring that these systems are fair and impartial has never been more critical. When AI models and other data systems are trained on historical data, the biases embedded in that data can creep into the outputs of even the most advanced systems. A report by the World Economic Forum's Global Future Council on Data Equity introduces a new framework for defining and implementing data equity, aiming to address the risk that emerging technologies will perpetuate old biases.

Hidden Bias in Healthcare Algorithms

An analysis of an algorithm used in the U.S. healthcare system offers a striking example of this kind of bias. The study found that the algorithm systematically disadvantaged Black patients with complex care needs.

The root cause lies in the data used to train the algorithm. The algorithm used past healthcare costs as a proxy for health needs, and because of income inequality and other barriers to accessing care, Black patients have historically received less expensive treatment. As a result, the algorithm underestimated how sick Black patients were.

The study concluded that correcting this bias could increase the proportion of Black patients receiving additional care from 17.7% to 46.5%. This case underscores the urgent need for rigorous algorithmic audits and cross-departmental collaboration to remove such biases from decision-making processes.

Defining Data Equity: A Shared Responsibility

There is currently no universally recognized standard or definition of data equity.
To address the issue of bias, the Global Future Council on Data Equity at the World Economic Forum has developed a comprehensive definition:

“Data equity can be defined as the shared responsibility of upholding and promoting fair data practices that respect and advance human rights, opportunities, and dignity.”

Data equity is a fundamental responsibility that calls for strategic, participatory, inclusive, and proactive collective action: working together to build data-driven systems that ensure fair, just, and beneficial outcomes for individuals, groups, and communities worldwide. It recognizes that data practices, including the collection, organization, processing, retention, analysis, and management of data and the responsible application of the insights derived from it, have profound implications for human rights and for access to and control over societal, economic, environmental, and cultural resources and opportunities.

This definition highlights a crucial point: data equity isn't just about the numbers; it's about how those numbers shape people's real lives. It covers the entire life cycle of data, from how it is collected and processed to how it is used and, ultimately, who benefits from the insights it provides.

Achieving data equity requires a structured approach. The Data Equity Framework developed by the Global Future Council provides tools for reflection, research, and action. It is built on three key pillars: data, people, and purpose.

The Data Equity Framework is built around three core pillars.

Image source: Global Future Council on Data Equity

The framework's three core pillars are as follows:

- The nature of the data: This pillar focuses on the sensitivity of information and who is authorized to access it. For instance, health records are highly sensitive and require strict protection.

- Purpose of data usage: This pillar considers factors such as credibility, value, originality, and applicability. Is the use of data ethical? Does it truly deliver value to society?

- People involved: This pillar covers the relationships among data collectors, processors, and data subjects, as well as the responsibilities associated with handling data. It also takes into account the expertise of those processing the data and, crucially, who ultimately bears responsibility for how the data is used.
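One way a team might put the three pillars to work is to record them for each dataset before the data is used. The sketch below is a hypothetical Python data structure, not a tool from the Council's report: the class names `Sensitivity` and `DatasetReview`, their fields, the sensitivity levels, and the checks in `open_questions` are all illustrative assumptions.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Sensitivity(Enum):
    # Hypothetical sensitivity scale; real classifications vary by organization.
    PUBLIC = 1
    INTERNAL = 2
    HIGHLY_SENSITIVE = 3  # e.g. health records


@dataclass
class DatasetReview:
    """Hypothetical per-dataset record of the three pillars."""
    name: str
    # Pillar 1, the nature of the data: how sensitive it is and who may access it
    sensitivity: Sensitivity
    authorized_roles: set[str]
    # Pillar 2, purpose: what the data is for and what value it should deliver
    purpose: str
    expected_benefit: str
    # Pillar 3, people: who collects and processes it, and who is accountable
    collectors: list[str]
    processors: list[str]
    accountable_owner: Optional[str] = None

    def open_questions(self) -> list[str]:
        """Return gaps a reviewer might raise before the data is used."""
        issues = []
        if self.sensitivity is Sensitivity.HIGHLY_SENSITIVE and not self.authorized_roles:
            issues.append("no authorized roles defined for sensitive data")
        if not self.purpose.strip() or not self.expected_benefit.strip():
            issues.append("purpose or expected benefit is not stated")
        if self.accountable_owner is None:
            issues.append("no one is accountable for how the data is used")
        return issues


review = DatasetReview(
    name="claims_history",  # hypothetical dataset
    sensitivity=Sensitivity.HIGHLY_SENSITIVE,
    authorized_roles={"care-management team"},
    purpose="identify patients for care-management programs",
    expected_benefit="earlier support for high-need patients",
    collectors=["hospital billing"],
    processors=["analytics team"],
)
print(review.open_questions())
# → ['no one is accountable for how the data is used']
```

Keeping the checks as plain questions rather than automatic approvals reflects the framework's emphasis on reflection: the record surfaces gaps, and people decide how to close them.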

Putting Theory into Practice

Returning to the healthcare example discussed earlier, we can see how these principles apply. The Global Future Council's report offers the following initial recommendations for eliminating bias:

- Input stage: Collect more comprehensive health data, including direct measurements of health status and of barriers to healthcare. Review input variables for potential proxy discrimination.

- Process stage: Maintain transparency in data collection and algorithmic scoring.

- Output stage: Regularly audit how algorithmic decisions affect outcomes for patients from different racial groups. This enables clinicians to identify predictions that may be biased or inaccurate.
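To make the output-stage audit more concrete, here is a minimal Python sketch on a synthetic cohort. It assumes, purely for illustration, two groups ("A" and "B") with identical distributions of true health need, where group B receives about 30% less spending for the same need, and that a direct measure of need is available, as the input-stage recommendation suggests collecting. The names `make_cohort` and `share_flagged`, the 3% cutoff, and every number are hypothetical and not taken from the study or the Council's report. The sketch ranks patients once by historical cost (the proxy) and once by need, then reports group B's share among those flagged for extra care.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Patient:
    group: str    # "A" or "B"; hypothetical group labels
    need: float   # direct measure of health status (assumed observable)
    cost: float   # historical spending, used as a proxy label


def make_cohort(n=10_000, share_b=0.3, spending_gap=0.7, seed=0):
    """Build a synthetic cohort (all parameters are illustrative assumptions).

    Both groups draw true health need from the same distribution, but
    group B receives only `spending_gap` times as much spending for the
    same need, mimicking barriers to accessing care.
    """
    rng = random.Random(seed)
    cohort = []
    for _ in range(n):
        group = "B" if rng.random() < share_b else "A"
        need = rng.gammavariate(2.0, 1.0)
        factor = spending_gap if group == "B" else 1.0
        cost = need * factor * rng.uniform(0.9, 1.1)  # small random noise
        cohort.append(Patient(group, need, cost))
    return cohort


def share_flagged(cohort, score, group="B", top_fraction=0.03):
    """Rank patients by the attribute named `score`, flag the top
    `top_fraction` for extra care, and return the share of flagged
    patients who belong to `group`."""
    ranked = sorted(cohort, key=lambda p: getattr(p, score), reverse=True)
    k = max(1, int(len(ranked) * top_fraction))
    return sum(p.group == group for p in ranked[:k]) / k


if __name__ == "__main__":
    cohort = make_cohort()
    # Output-stage audit: compare who gets flagged under the cost proxy
    # versus under a direct measure of need.
    print(f"Group B share, ranked by cost: {share_flagged(cohort, 'cost'):.1%}")
    print(f"Group B share, ranked by need: {share_flagged(cohort, 'need'):.1%}")
```

Comparing the two rankings is one simple form of audit: if a group's share among flagged patients drops sharply when cost stands in for a direct health measure, the proxy label deserves scrutiny. The printed shares depend entirely on the assumed spending gap and are not meant to reproduce the study's 17.7% and 46.5%.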

Algorithm auditing to improve healthcare services.

Image source: World Economic Forum

Building a fairer data system is not a one-time fix. It is an ongoing process that requires monitoring, collaboration, and a consistent, unwavering commitment to equity at every step. The lessons we've learned from addressing racial bias in healthcare algorithms can, and should, guide us as we work toward data equity in other critical areas.

The above content represents solely the authors' personal views. This article is translated from the World Economic Forum's Agenda blog; the Chinese version is for reference only.

Editor: Wang Can

The World Economic Forum is an independent and neutral platform dedicated to bringing together diverse perspectives to discuss critical global, regional, and industry-specific issues.
