Inclusive AI must overcome language and cultural barriers across the globe.

Image source: Photo by NASA on Unsplash

Harrison Lung

Chief Strategy Officer of e& Group

Artificial intelligence must be built on inclusive and representative data—and it all starts with "access."

Modernizing data infrastructure is essential to expanding artificial intelligence sustainably and securely.

Building inclusive AI requires collaborative governance—and, even more importantly, a sustained commitment to ethical design.

As artificial intelligence reshapes the global economy, one truth is becoming increasingly clear: AI’s power, fairness, and insight all stem from the data it learns from. Yet today’s data still cannot tell the full human story. Nearly 2.6 billion people remain offline, which means the datasets used to train AI do not yet capture the full diversity of human experience.

Take language as an example. More than 7,000 languages are spoken worldwide, yet most AI chatbots are trained on data from only about 100 of them. According to the international advocacy group Center for Democracy & Technology, English is spoken by fewer than 20% of the world’s population yet accounts for nearly two-thirds of all online content, and it remains the dominant language behind large language models. This is not just a matter of inclusivity; it is fundamentally a problem of incomplete data. Because digitalization is uneven, many underrepresented languages have little or no structured digital content, which makes it very difficult for AI systems to learn them.

Missing data is not merely a technical oversight; it is also a societal risk. Without deliberate design, artificial intelligence will continue to exclude large segments of the global population. This will not only deepen existing inequalities but also cost us the valuable perspectives of underrepresented groups.

To bridge this gap, we must fundamentally rethink how we build artificial intelligence. That means developing diverse tools and models tailored to the linguistic ecosystems of different languages. Regional language models such as Jais and open-source models such as Falcon are strong examples of efforts to capture the linguistic nuances and cultural distinctiveness of non-English-speaking communities and to place cultural relevance at the heart of AI design.

The Starting Point for Inclusive AI: Enhancing Connectivity

Building inclusive AI starts with "access." Roughly one-third of the world’s population still lacks a stable internet connection, which effectively makes these people "invisible" to the algorithms that power our economies. Although 68% of the world’s population (5.5 billion people) is now online and 5G coverage has reached 51% of the global population, the digital divide remains stark: in low-income countries, 5G coverage stands at just 4%.

To help close this gap, e& Group has committed to investing more than $6 billion by 2026 to expand affordable internet access across 16 countries in the Middle East, Africa, and Asia. Yet connectivity is only the beginning. As our recent joint study with IBM, "AI Advantages in the MENA Region: Opportunities to Leapfrog and Lead," highlights, building a robust data infrastructure is a critical first step toward ensuring that newly connected populations are not just online but also "seen, heard, and represented" within AI systems.

From Fragmentation to Unified Models

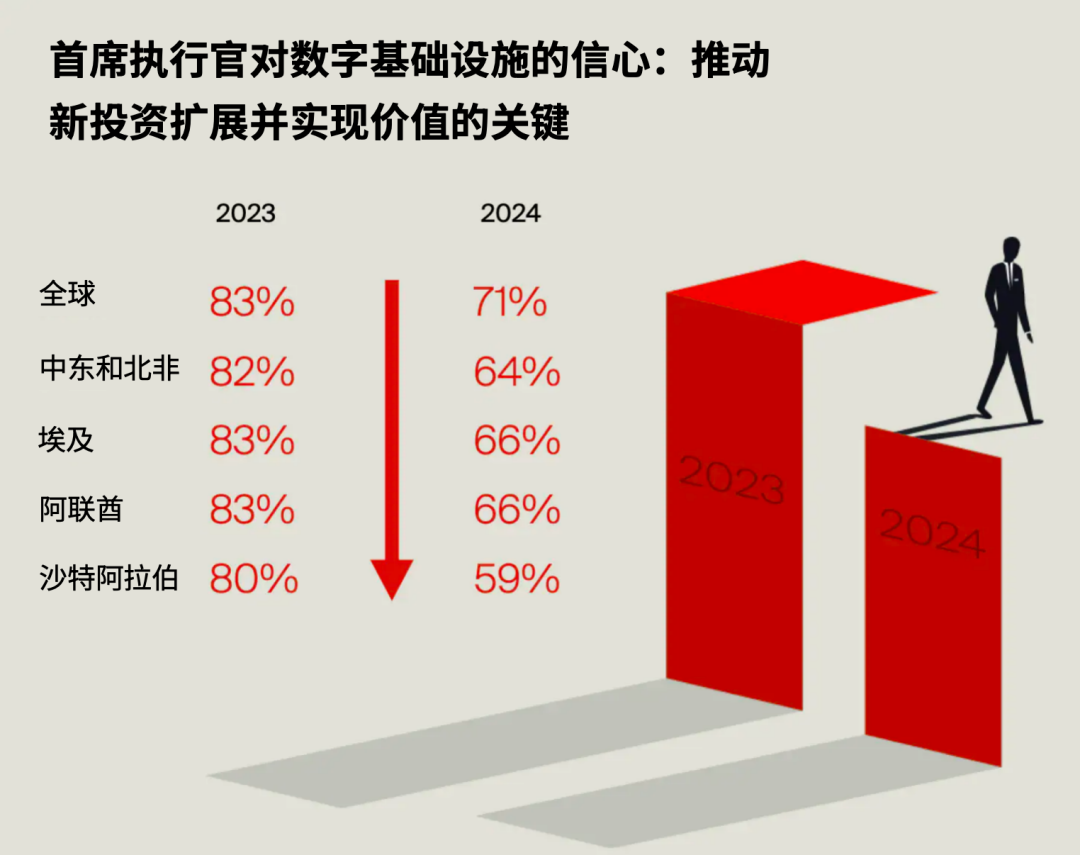

The Middle East and North Africa region is at a critical turning point. Governments across the region have unveiled ambitious AI strategies, investing in infrastructure and nurturing innovation ecosystems. Yet even as AI adoption accelerates, public trust in digital infrastructure is waning. CEO confidence in the Middle East and North Africa has dropped from 82% to 64% over the past year.

The adoption of artificial intelligence is accelerating, yet public trust in digital infrastructure is declining.

Image source: "AI Advantages in the MENA Region: Opportunities to Leapfrog and Lead," jointly released by e& Group and IBM

This highlights the urgent need to move from isolated legacy systems to a scalable, AI-ready data architecture. A unified data model, for instance, offers a clear path forward: it lets organizations share insights across borders while safeguarding privacy and ownership rights. For such models to succeed, interoperability, sensitivity to local cultural nuances, and regulatory compliance must be built into the system architecture from the design stage.

Trust Is Built by Design, Not Assumed

Our research also shows that data privacy (48%) and regulatory compliance (43%) are the biggest barriers to generative AI adoption cited by CEOs in the Middle East and North Africa. These concerns are far from unfounded. As AI becomes more deeply integrated into public services, finance, healthcare, and education, trust becomes ever more critical. Fragmented regulations, outdated governance frameworks, and escalating cybersecurity threats could all slow progress and erode public confidence.

This is why responsible AI must be built into system design from the outset, rather than added later as an afterthought or a remedial measure. Today, the ability to control, safeguard, and ethically manage a nation’s data assets has become a core strategic issue for countries worldwide. Adaptive governance frameworks, real-time monitoring and auditing, and employee training are all critical components of this effort. In some cases, nations may pursue greater digital sovereignty, ensuring local control over vital data infrastructure, AI models, and decision-making processes and aligning these capabilities with national values, regulations, and long-term strategic interests.

Whether through a sovereignty model, regional partnerships, or an adaptive governance framework, the goal remains the same: to build AI systems that are trustworthy, ethical, and inclusive.

The Power of Strategic Public-Private Partnerships

No single player can build inclusive AI alone. Meaningful progress requires a collaborative ecosystem, and public-private partnerships are essential for scaling responsible innovation. Our collaboration with the World Economic Forum’s EDISON Alliance has helped us amplify our impact quickly. The EDISON Alliance alone has already reached more than 30 million people by strengthening connectivity and advancing digital financial tools, exceeding our impact commitments a full year ahead of schedule.

By helping people get online and participate in the digital economy, we also ensure that their voices are reflected in the datasets and systems used to train artificial intelligence. When people are connected, counted in the statistics, and understood in context, the AI systems built on their data become fairer and more effective.

Investing in a Robust Data Foundation Is Essential

Today, for CEOs in the Middle East and North Africa, modernizing data architecture is an even higher priority than generative AI itself, because the real power of AI depends on the quality and accessibility of its data. Poor-quality, fragmented, incomplete, or biased data not only leads to flawed decisions and unreliable automation but also amplifies inequality and systemic risk.

CEOs in the region have recognized the urgency of this issue. Around 46% of MENA leaders say that modernizing data architecture, for example through data fabric and data mesh, will be critical over the next three years. Investment alone, however, is not enough. Companies must embed their data strategies into daily workflows, establish cross-functional collaboration, and foster an organizational culture that prioritizes transparency and accountability.

PwC notes that a governance-driven, unified, enterprise-wide data strategy is key to unlocking the full potential of artificial intelligence at scale. Such a strategy also provides the flexibility to adapt to emerging compliance standards across jurisdictions.

Inclusive AI Must Be Purposeful

Tomorrow’s leaders will be those who invest early, and wisely, in inclusive technology infrastructure. This is not just a matter of technical design; it is fundamentally a question of responsibility. We must build AI systems that serve everyone, not just the "connected." Today, nearly 2.6 billion people remain "offline": their voices go unheard, their experiences remain unknown, and their perspectives are not factored into the data shaping tomorrow’s technologies.

Looking ahead, our shared responsibility goes beyond asking "How do we use AI?" It also requires us to ask: "Whose reality does our AI truly reflect?"

If we want artificial intelligence to truly serve all of humanity, we must build it deliberately, embedding inclusivity into its core from the very beginning. Only then can AI genuinely represent every one of us.

The above content represents the author's personal views only. This article is translated from the World Economic Forum's Agenda blog; the Chinese version is for reference purposes only.

Translated by: Wu Yimeng | Edited by: Wang Can

The World Economic Forum is an independent and neutral platform dedicated to bringing together diverse perspectives to discuss critical global, regional, and industry-specific issues.
